Joseph D’Aleo here

Introduction

Virtually every month and year we see reports proclaiming the latest global data to be among the warmest in the entire record, back to 1895 or earlier. But efforts to assess changes in the climate are very young and beset with many issues.

The first truly global effort to measure atmospheric temperatures began in 1979, with satellite microwave sensing.

The first U.S. station-based dataset and monthly analysis was launched in the late 1980s, and the first global one in 1992. These datasets are products of simulation models and data assimilation software, not solely real data.

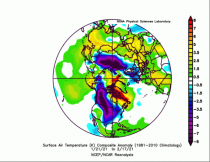

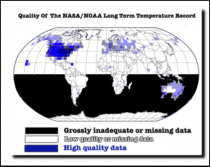

One of the key issues is spatial bias: the density of observation stations varied greatly across the globe. Dr. Mototaka Nakamura, in his book “Confessions of a climate scientist: the global warming hypothesis is an unproven hypothesis,” writes that over the last 100 years “only 5 percent of the Earth’s area is able [to] show the mean surface temperature with any certain degree of confidence.” He adds: “A far more serious issue with calculating ‘the global mean surface temperature trend’ is the acute spatial bias in the observation stations. There is nothing they can do about this either. No matter what they do with the simulation models and data assimilation programs, this spatial bias before 1980 cannot be dealt with in any meaningful way.”

On top of this spatial bias:

* Missing monthly data at 20-90% of existing stations globally, requiring model infilling, sometimes using data from stations hundreds of miles away

* Station siting not meeting specifications (the U.S. GAO found that 42% of U.S. stations did not meet siting standards), with serious warm biases

* Airport sensor systems designed for aviation, not climate, with allowable temperature errors of up to 1.9F

* Adjustments to the early record occur with every new update, each cooling early data and so increasing apparent warming. Dr. Nakamura blasts the ongoing data adjustments: “Furthermore, more recently, experts have added new adjustments which have the helpful effect of making the Earth seem to continue warming.” He deems this “data falsification.”

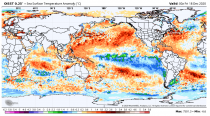

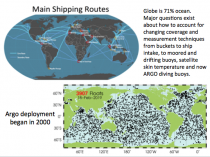

* Oceans, which cover 71% of the globe (81% of the Southern Hemisphere), had data limited to shipping lanes, mainly in the Northern Hemisphere, until 50 km nighttime satellite measurements of ocean skin temperature became available after 1984 and the roughly 4,000-buoy ARGO global network after 2000.

Attempting to compile a ‘global mean temperature’ from ever-changing, fragmentary, disorganized, error-ridden, geographically unbalanced data with multi-decadal evidence of manipulation does not meet the standard of quality science that the Information Quality Act (IQA) requires for the best possible policy decision-making. We went from acknowledging serious observational limitations well into the late 20th century to claiming we can confidently rank each month, for the U.S. and the globe, to tenths of a degree back into the 1800s.

Dr. Nakamura commented at NTZ: “So how can we be sure about the globe’s temperatures, and thus its trends before 1980? You can’t. The real data just aren’t there. Therefore, the global surface mean temperature change data no longer have any scientific value and are nothing except a propaganda tool to the public.”

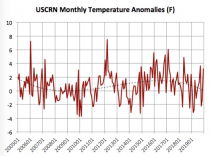

The most prudent course for our nation would be to focus on satellite data, the CRN and (if funded) USRCRN networks for land, and ARGO buoy data for the oceans, and to assess the changes over the next few decades before taking radical steps that would cancel our country’s hard-fought and finally achieved energy independence.

Temperature Measurement Timeline Highlights

Every month and year we hear claims of record-setting warmth back into the 1800s. But see below how recently we began attempting to reconstruct the past, scraping together segments of data and using models and adjustments to create the big picture. This was especially difficult over the oceans, which cover 71% of the Earth’s surface.

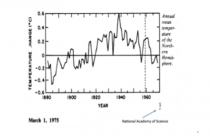

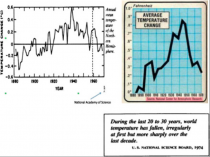

1975 - The National Academy of Sciences makes the first attempt at determining global temperatures and their trend, limiting it to Northern Hemisphere land areas (the U.S. and Europe) because it recognized that reliable data on a larger scale and over the oceans were simply not available or trustworthy. The data it could access showed dramatic warming from the 1800s to around 1940, then a reversal into the late 1970s that roughly eliminated the nearly 60 years of warming. The CIA warned that the scientific consensus was that we might be heading toward a dangerous new ice age.

1978 - The New York Times reported there was too little temperature data from the Southern Hemisphere to draw any reliable conclusions. The report it referenced was prepared by German, Japanese and American specialists and appeared in the December 15 issue of Nature, the British journal. “Data from the Southern Hemisphere, particularly south of latitude 30 south, are so meager that reliable conclusions are not possible,” the report says. “Ships travel on well-established routes so that vast areas of ocean are simply not traversed by ships at all, and even those that do may not return weather data en route.”

1979 - Satellite measurement of global atmospheric temperatures begins; the UAH and RSS satellite datasets start from this year.

1981 - NASA’s James Hansen et al. reported that “Problems in obtaining a global temperature history are due to the uneven station distribution, with the Southern Hemisphere and ocean areas poorly represented” (Science, 28 August 1981, Volume 213, Number 4511).

1989 - In response to the need for an accurate, unbiased, modern historical climate record for the United States, personnel at the Global Change Research Program of the U.S. Department of Energy and at NCEI defined a network of 1,219 stations in the contiguous United States whose observations would comprise a key baseline dataset for monitoring U.S. climate. Since then, the USHCN (U.S. Historical Climatology Network) dataset has been revised several times (e.g., Karl et al., 1990; Easterling et al., 1996; Menne et al., 2009). The three dataset releases described in Quinlan et al., 1987, Karl et al., 1990 and Easterling et al., 1996 are now referred to as the USHCN version 1 datasets.

The documented changes that were addressed include changes in the time of observation (Karl et al., 1986), station moves, and instrument changes (Karl and Williams, 1987; Quayle et al., 1991). Apparent urbanization effects were also addressed in version 1 with an urban bias correction (Karl et al., 1988).

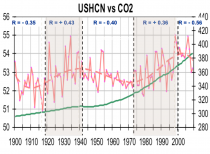

Tom Karl wrote with Kukla and Gavin in a 1986 paper, “Urban Warming”: “Secular trends of surface air temperature computed predominantly from [urban] station data are likely to have a serious warm bias... The average difference between trends [urban siting vs. rural] amounts to an annual warming rate of 0.34C/decade (3.4C/century)… The reason why the warming rate is considerably higher [may be] that the rate may have increased after the 1950s, commensurate with the large recent growth in and around airports. Our results and those of others show that the urban growth inhomogeneity is serious and must be taken into account when assessing the reliability of temperature records.”

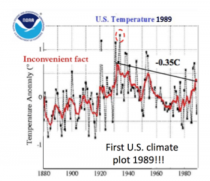

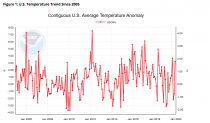

Here was an early 1989 plot of USHCNv1.


1989 - The New York Times reported that the U.S. data “failed to show warming trend predicted by Hansen in 1980.”

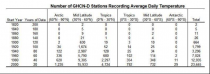

1992 - NOAA’s first global monthly assessment began (GHCNm, Vose). Subsequent releases include version 2 in 1997 (Peterson and Vose, 1997), version 3 in 2011 (Lawrimore et al., 2011) and, most recently, version 4 (Menne et al., 2018). For the moment, GHCNm v4 consists of mean monthly temperature data only.

1992 - The National Weather Service (NWS) Automated Surface Observing System (ASOS), which serves as the primary data source for more than 900 airports nationwide and is used for climate data archiving, was deployed in the early 1990s. Note that the specifications allowed a root-mean-square error (RMSE) of 0.8F and a maximum error of 1.9F. ASOS was designed to supply key information for aviation, such as ceiling, visibility, wind, and indications of thunder and icing. It was NOT designed for assessing climate.

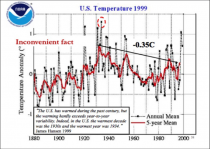

1999 - The USHCN temperature record still showed 1934 as the warmest year, as it had a decade earlier. James Hansen noted: “The U.S. has warmed during the past century, but the warming hardly exceeds year-to-year variability. Indeed, in the U.S. the warmest decade was the 1930s and the warmest year 1934.” When asked about the discrepancy with the global record, Hansen said the U.S. was only 2% of the world and both could be right.

Here was the 1999 plot.

2000 - Deployment began of ARGO, a network that grew to nearly 4,000 diving buoys worldwide, providing the first reliable real-time, high-resolution monitoring of ocean temperatures and heat content.

2004 - The U.S. Climate Reference Network (USCRN) was launched to provide uncontaminated temperatures in the lower 48 states, with guidance from John Christy of UAH, who had run a pilot network in Alabama, where he was State Climatologist. Its 114 stations met specifications that kept them away from local heat sources.

2005 - Pielke and Davey (2005) found that a majority of stations, including climate stations in eastern Colorado, did not meet requirements for proper siting. Pielke and colleagues extensively documented poor siting and land-use change issues in numerous peer-reviewed papers, many summarized in the landmark paper “Unresolved issues with the assessment of multi-decadal global land surface temperature trends” (2007).

2007 - A new version of the U.S. climate network, USHCNv2, introduced adjustments that included Karl’s adjustment for urban warming. David Easterling, Chief of the Scientific Services Division at NOAA’s Climate Center, expressed concern in a letter to James Hansen at NASA: “One fly in the ointment, we have a new adjustment scheme for USHCNv2 that appears to adjust out some, if not all of the local trend that includes land use change and urban warming.”

2008 - In a volunteer survey project, Anthony Watts and his more than 650 volunteers at http://www.surfacestations.org found that over 900 of the first 1,067 stations surveyed in the 1,221-station U.S. climate network did not come close to meeting the Climate Reference Network (CRN) siting criteria. Only about 3% met the ideal siting specification. They found stations located next to the exhaust fans of air conditioning units, surrounded by asphalt parking lots and roads, on blistering-hot rooftops, and near sidewalks and buildings that absorb and radiate heat. They found 68 stations located at wastewater treatment plants, where the process of waste digestion causes temperatures to be higher than in surrounding areas. In fact, they found that 90% of the stations failed to meet the National Weather Service’s own siting requirement that stations be 30 m (about 100 feet) or more away from an artificial heating or reflecting source.

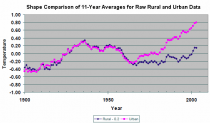

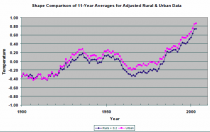

2009 - NASA’s Dr. Edward R. Long analyzed the new version of the U.S. data, examining both raw and adjusted NCDC (now NCEI) data for a selected set of contiguous U.S. rural and urban stations, 48 of each (one per state). The raw data show temperature increases of 0.13 and 0.79 C/century for the rural and urban sets, respectively, consistent with urban warming effects. The adjusted data show 0.64 and 0.77 C/century, respectively.

Comparing the adjusted rural data with the raw rural data shows a systematic treatment that makes the adjusted rural rate of temperature increase about five times that of the raw data. This suggests that NCDC’s adjustment protocol causes historical rural data to take on the timeline characteristics of urban data. The consequence, intended or not, is to report a false rate of temperature increase for the contiguous U.S., consistent with modeling based on greenhouse theory.
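The ratios behind these statements can be checked directly from the four trend figures quoted above. A minimal sketch in Python, assuming the figures are transcribed correctly; the names `TRENDS`, `adjustment_factor` and `urban_rural_gap` are our own illustration, not part of Long's analysis or any NOAA tool:

```python
# Worked check of the trend figures quoted from Long (2009).
# Values are copied from the text; units are deg C per century.
TRENDS = {
    "rural": {"raw": 0.13, "adjusted": 0.64},
    "urban": {"raw": 0.79, "adjusted": 0.77},
}


def adjustment_factor(raw: float, adjusted: float) -> float:
    """How many times larger the adjusted trend is than the raw trend."""
    return adjusted / raw


def urban_rural_gap(kind: str) -> float:
    """Urban minus rural trend, for either the 'raw' or 'adjusted' data."""
    return TRENDS["urban"][kind] - TRENDS["rural"][kind]


for setting, t in TRENDS.items():
    factor = adjustment_factor(t["raw"], t["adjusted"])
    print(f"{setting}: adjusted/raw = {factor:.2f}")

print(f"urban-rural gap, raw:      {urban_rural_gap('raw'):.2f} C/century")
print(f"urban-rural gap, adjusted: {urban_rural_gap('adjusted'):.2f} C/century")
```

Run as-is, it prints an adjusted/raw factor of about 4.92 for the rural set (the 5-fold figure) and about 0.97 for the urban set, and shows the urban-minus-rural gap shrinking from 0.66 C/century in the raw data to 0.13 C/century after adjustment.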

2010 - A large group of climate scientists published a 2009 review of temperature issues entitled “Surface Temperature Records: A Policy Driven Deception.”

2010 - A landmark study followed, “Analysis of the impacts of station exposure on the U.S. Historical Climatology Network temperatures and temperature trends.” Authored by Souleymane Fall, Anthony Watts, John Nielsen-Gammon, Evan Jones, Dev Niyogi, John R. Christy and Roger A. Pielke Sr., it represented years of work studying the quality of the U.S. temperature measurement system.

2010 - In a review sparked by this finding, the GAO found that “42% of the active USHCN stations in 2010 clearly did not meet NOAA’s minimum siting standards.” What’s more, “just 24 of the 1,218 stations (about 2 percent) have complete data from the time they were established.”

2011 - A paper, “A Critical Look at Surface Temperature Records,” was published in Elsevier’s “Evidence-Based Climate Science.”

2013 - NOAA responded to the siting papers and the GAO’s admonition by removing and/or replacing the worst stations. Also, NOAA’s monthly press releases never mention satellite measurements, although NOAA had claimed satellites were the future of observations.

2013 - Richard Muller releases the Berkeley Earth Surface Temperature (BEST) set of data products, originally a gridded reconstruction of land surface air temperature records spanning 1701-present, and including an 1850-present merged land-ocean dataset that combines the land analysis with an interpolated version of HadSST3. Homogenization is used heavily, and seasonal biases in the early record, before Stevenson screens were in use, are acknowledged.

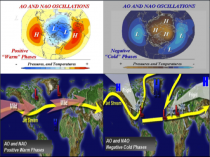

2015 - A pause in warming that started around 1997 was finally acknowledged in the journal Nature by IPCC Lead Author Kevin Trenberth and attributed to the cyclical influence of natural factors, such as El Niño and other ocean cycles, on global climate. The 2015 AMS Annual Meeting had three panels addressing ‘the pause’.

2016 - The study by Tom Karl et al. (2015) purported to show no ‘hiatus’ in global warming in the 2000s (“Federal scientists say there never was any global warming ‘pause’”). John Bates, who spent the last 14 years of his career at NOAA’s National Climatic Data Center as a Principal Scientist, commented: “In every aspect of the preparation and release of the datasets leading into Karl 15, we find Tom Karl’s thumb on the scale pushing for, and often insisting on, decisions that maximize warming and minimize documentation.” The study drew criticism from other climate scientists, who disagreed with Karl’s conclusion about the ‘hiatus.’

2017 - A new U.S. climate dataset, nClimDiv, with climate-division model reconstructions and statewide averages, was gradually deployed and replaced USHCNv2. As a result, NOAA gave 40 of the 48 contiguous states ‘new’ warming. The older drd964x CONUS warming rate from 1895 to 2012 was 0.088F/decade; the new nClimDiv rate from 1895 to 2014 is 0.135F/decade, roughly 50% higher. Though the new dataset makes analysts’ work and data access more flexible, this came at the expense of the accuracy demanded by the IQA.
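A quick arithmetic check of the two CONUS rates quoted above, as a Python sketch; the constant and function names are hypothetical, and the values are copied from the text. Note that the two periods differ slightly (1895-2012 vs. 1895-2014), so this is not a strictly like-for-like comparison.

```python
# Compare the two CONUS warming rates quoted in the text (deg F per decade).
DRD964X_RATE = 0.088   # older climate-division dataset, 1895-2012
NCLIMDIV_RATE = 0.135  # nClimDiv, 1895-2014


def ratio(old: float, new: float) -> float:
    """How many times the old rate the new rate is."""
    return new / old


def percent_increase(old: float, new: float) -> float:
    """Relative increase of new over old, in percent."""
    return (new - old) / old * 100.0


def per_century(rate_per_decade: float) -> float:
    """Convert a per-decade trend into a per-century trend."""
    return rate_per_decade * 10.0


print(f"nClimDiv / drd964x = {ratio(DRD964X_RATE, NCLIMDIV_RATE):.2f}")
print(f"increase = {percent_increase(DRD964X_RATE, NCLIMDIV_RATE):.0f}%")
print(f"drd964x:  {per_century(DRD964X_RATE):.2f} F/century")
print(f"nClimDiv: {per_century(NCLIMDIV_RATE):.2f} F/century")
```

It prints a ratio of 1.53, an increase of about 53%, and per-century rates of 0.88F and 1.35F.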

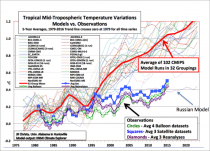

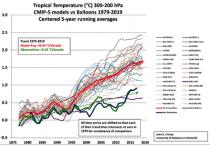

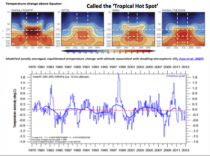

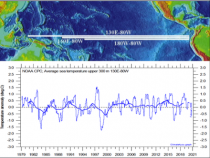

2017 - Landmark studies were published which proved conclusively that steadily rising atmospheric CO2 concentrations had no statistically significant impact on any of 14 temperature time series (at the surface and in the atmosphere), and showed that the so-called greenhouse-induced Tropical Hot Spot caused by rising atmospheric CO2 levels simply does not exist in the real world.

2019 - Climate scientist Dr. Mototaka Nakamura, in his book “Confessions of a climate scientist: the global warming hypothesis is an unproven hypothesis,” writes that over the last 100 years “only 5 percent of the Earth’s area is able [to] show the mean surface temperature with any certain degree of confidence.” He continues: “A far more serious issue with calculating ‘the global mean surface temperature trend’ is the acute spatial bias in the observation stations. There is nothing they can do about this either. No matter what they do with the simulation models and data assimilation programs, this spatial bias before 1980 cannot be dealt with in any meaningful way.” And: “So how can we be sure about the globe’s temperatures, and thus its trends before 1980? You can’t. The real data just aren’t there. Therefore, the global surface mean temperature change data no longer have any scientific value and are nothing except a propaganda tool to the public.”

2020 - The U.S. Regional Climate Reference Network (USRCRN) pilot program (2011) aimed to maintain the same level of climate-science-quality measurements as the national-scale U.S. Climate Reference Network (USCRN), but with stations spaced more closely and focused solely on temperature and precipitation. After a pilot project in the Southwest, USRCRN stations were to be deployed at a 130 km spatial resolution across the United States to allow detection of regional climate change signals. If NOAA advanced this, America would, after a few decades, have for the first time ever a dataset that could be relied on for policymaking. Even then, the new data would not replace the old data with all its issues and uncertainties, which are not appropriate for policy decisions. It appears this program will not be advanced under this administration.

2020 - Taishi Sugiyama of The Canon Institute for Global Studies (Japan) released a working paper on our climate system, “The Earth Climate System as Coupled Nonlinear Oscillators under Quasi-Periodical Forcing from the Space” (this includes ENSO and solar). We could not agree more. See peer-reviewed comments on these natural factors here.

Thanks to ClimateChangeDispatch.com

That the latest World Weather Attribution (WWA) post, “Rapid attribution analysis of the extraordinary heatwave on the Pacific Coast of the US and Canada June 2021,” has twenty-one contributors from prestigious research groups around the world gives it even more piquancy. What a treat! I had not been so flummoxed since reading Alan Sokal’s scholarly hoax over two decades ago: “Transgressing the Boundaries: Towards a Transformative Hermeneutics of Quantum Gravity.”

The WWA post, alas, is neither hoax nor parody, but the real deal: a collaboration, in record time no less, “to assess to what extent human-induced climate change made this heatwave hotter and more likely.” Whether “human-induced climate change,” whatever that is, was present at all was not on the menu.

So it’s down the rabbit hole of questionable-cause logical fallacies in search of an answer: post hoc ergo propter hoc: ‘after this, therefore because of this’; “since event Y followed event X, event Y must have been caused by event X”; or if you prefer, cum hoc ergo propter hoc: ‘with this, therefore because of this’. A rooster crowing before sunrise does not mean it caused the sun to rise. A lot of cocks crowing before a big conference, however, could cause an increase in the flow of money into the Green Climate Fund. Cock-a-doodle-do.

Whatever the case, we clearly need a New Law of Climate Change: Climate alarmism (CA) increases exponentially as time, T, to the next United Nations Conference of the Parties (COP) or atmospheric Armageddon (AA) declines to zero; where CA is measured by the frequency of MSM and social media amplification occurring in a specific period of observation, P.

As for last month’s “extraordinary heatwave,” when competition with COVID threatens to steal your thunder, it pays to be as quick as greased lightning to trumpet panic and hyperbole. The paint was barely dry on June 2021 when WWA concluded:

An event such as the Pacific Northwest 2021 heatwave is still rare or extremely rare in today’s climate, yet would be virtually impossible without human-caused climate change. As warming continues, it will become a lot less rare.

You might wonder how WWA could distinguish “human-caused climate change” from the weather over such a short period, and determine “how much less severe” the heatwave “would have been in a [computer-generated] world without human-caused climate change.”

Well, it used published peer-reviewed methods to analyze maximum temperatures in the region most affected by the heat (45-52N, 119-123W). Yet “the Earth is large and extreme weather occurs somewhere almost every day.” So which extreme weather events (EWEs) merit an attribution study? WWA prioritizes those that “have a large impact or provoke strong discussion,” so that its “answers will be useful for a large audience.”

For WWA the heatwave was a “strong warning” of worse to come:

Our results provide a strong warning: our rapidly warming climate is bringing us into uncharted territory that has significant consequences for health, well-being, and livelihoods. Adaptation and mitigation are urgently needed to prepare societies for a very different future. Adaptation measures need to be much more ambitious and take account of the rising risk of heatwaves around the world, including surprises such as this unexpected extreme… In addition, greenhouse gas mitigation goals should take into account the increasing risks associated with unprecedented climate conditions if warming would be allowed to continue. (media release, 7 July 2021)

It included two qualifications:

It is important to highlight that, because the temperature records of June 2021 were very far outside all historical observations, determining the likelihood of this event in today’s climate is highly uncertain.

Based on this first rapid analysis, we cannot say whether this was a so-called “freak” event (with a return time on the order of 1 in 1000 years or more) that largely occurred by chance, or whether our changing climate altered conditions conducive to heatwaves in the Pacific Northwest, which would imply that “bad luck” played a smaller role and this type of event would be more frequent in our current climate.

Yet WWA still concluded that:

In either case, the future will be characterized by more frequent, more severe, and longer heatwaves, highlighting the importance of significantly reducing our greenhouse gas emissions to reduce the amount of additional warming.

This kind of science might be all right as an academic game with complex computer models. During the past decade, however, so-called “rapid attribution analysis” has moved outside its core business into climate politics. Researchers have become activists. Gaming uncertainty is the only game in town, and the profession knows how to play it. Its media releases are a key driver of the UN’s multi-trillion-dollar “ambition” to monetize “climate change” and greenmail the developed world.

Did WWA assess all the factors, including natural variability? Not according to the Cliff Mass Weather Blog:

Society needs accurate information in order to make crucial environmental decisions. Unfortunately, there has been a substantial amount of miscommunication and unscientific hand-waving about the recent Northwest heatwave. This blog post uses rigorous science to set the record straight… It describes the origins of a meteorological black swan event and how the atmosphere is capable of attaining extreme, unusual conditions without any aid from our species.

It ultimately comes down to the modeling. Is it meaningful or meaningless? WWA’s “validation criteria” assessed the similarity between the modeled and observed seasonal cycle and other factors. The outcomes were described as “good”, “reasonable” or “bad”. All the “validation results” appear in Table 3 of the WWA analysis. Of the 36 models used, the results from nine were deemed “bad” (25%), 13 were “reasonable” (36%), and the remaining 14 were “good” (39%).
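The percentages follow from the three counts. A small Python tally, assuming the counts above are taken correctly from Table 3 of the WWA analysis (the variable names are ours):

```python
from collections import Counter

# Validation outcomes for the 36 models, as counted in the text
# from Table 3 of the WWA analysis.
validation = Counter(bad=9, reasonable=13, good=14)

total = sum(validation.values())
shares = {label: 100.0 * count / total for label, count in validation.items()}

for label in ("good", "reasonable", "bad"):
    print(f"{label:>10}: {validation[label]:2d} of {total} models ({shares[label]:.0f}%)")
```

The counts sum to 36, and the shares round to 39% good, 36% reasonable and 25% bad, matching the figures given.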

In a 2009 paper by Reto Knutti et al., “Challenges in combining projections from multiple climate models,” the five authors stressed that there is little agreement on metrics to separate “good” and “bad” models, and that there is concern that model development, evaluation and posterior weighting or ranking all use the same datasets. In what other field would it be legitimate to select only the models merely considered “good”, or to average them in some way, then claim the process produces an acceptable approximation to the truth and reality? Imagine how the public would react to a COVID vaccine with an efficacy of only 39 percent.

How did we get to this point? It all began with ACE, the Attribution of Climate-related Events initiative. ACE’s inaugural meeting was held at the National Center for Atmospheric Research (NCAR) in Boulder, Colorado, on January 26, 2009. ACE released a four-paragraph statement. Its mission would be “to provide authoritative assessments of the causes of anomalous climate conditions and EWEA”, presumably for the Intergovernmental Panel on Climate Change (IPCC). ACE’s “conceptual framework for attribution activities” would be “elevated in priority and visibility, leading to substantial increases in resources (funds, people and computers).” Everyone had to sing from the same song-sheet: “A consistent use of terminology and close collaborative international teamwork will be required to maintain an authoritative voice when explaining complex multi-factorial events such as the recent Australian bushfires.”

Three years later, Dr Peter Stott, now the Hadley Centre’s head of climate monitoring and attribution, again stressed the importance of reining in mavericks and having a unified “authoritative voice,” this time in a conference paper. “Unusual or extreme weather and climate-related events are of great public concern and interest,” he noted, “yet there are often conflicting messages from scientists about whether such events can be linked to climate change.”

“All too often the public receives contradictory messages from reputable experts. If the public hears that a particular weather event is consistent with climate change they may conclude that it is further proof of the immediate consequences of human-induced global warming. On the other hand, if the public hears that it is not possible to attribute an individual event, they may conclude that the uncertainties are such that nothing can be said authoritatively about the effects of climate change as actually experienced.”

Do not confuse them with chatter about uncertainties. Imagine the furor if too many suspect that nothing “can be said authoritatively about climate change”.

Yes, change is what the planet’s climate and weather do and have always done; but we can’t tell them it’s impossible to make predictions, given all the complexity.

As for seeing EWEs as having anything other than a human cause, WWA, ACE, and Net Zero advocates prefer to look the other way. They are determined to ensure no “conflicting messages” emerge about “climate change”.

This influential 2020 paper (ten authors), “A protocol for probabilistic extreme event attribution analyses,” actually includes tips on how to “successfully communicate an attribution statement.”

The eighth and final step in the extreme event attribution analysis is the communication of the attribution statement. All communication operations require communication professionals… Communication here concerns writing a scientific report, a more popular summary, targeted communication to policymakers, and a press release. We found that the first one is always essential; which of the other three are produced depends on the target audiences. For all results it is crucial that during this chain the information is translated correctly into the different stages. This sounds obvious, but in practice it can be hard to achieve.

For struggling communicators, the authors offer some helpful suggestions:

A 1-page summary in non-scientific language may be prepared for local disaster managers, policymakers, and journalists with the impacts, the attribution statement, and the vulnerability and exposure analysis, preferably with the outlook to the future if available. The local team members and other stakeholders in the analysis can be invited to be points of contact for anyone seeking further clarification of contextual information, or they may be brought closer into the project team to collaborate and communicate key attribution findings.

The press release:

should contain understandable common language. Furthermore, we found that after inserting quotes from the scientists that performed the analysis, people gain more confidence in the results. This may include accessible graphics, such as the representation [at right] of the change in intensity and probability of very mild months in the high Arctic as observed in November-December 2016, (van Oldenborgh et al., 2016a).

Social media:

It “can be used to amplify the spread of attribution findings and contribute to public discourse on the extreme event being studied. Social media can help to reach younger audiences (Hermida et al., 2012; Shearer and Grieco, 2019; Ye et al., 2017).”

“Social media monitoring and analytics can also be used to assess awareness and the spread of attribution findings” (Kam et al., 2019).

As for the text, WWA noted some intriguing “research into the efficacy of different ways to communicate results and uncertainties to a large audience.”

For instance, van der Bles et al. (2018) found that a numerical uncertainty range hardly decreases trust in a statement, whereas a language qualification does decrease it significantly. We also found that communicating only a lower bound, because it is mathematically better defined in many cases, is not advisable. In the first place a phrase like “at least” was found to be dropped in the majority of popular accounts. Secondly, quoting only the lower bound de-emphasizes the most likely result and therefore communicates too conservative an estimate (Lewandowsky et al., 2015).

What goes around comes around. Here we have a paper by cognitive psychologist Professor Stephan Lewandowsky et al., “Seepage: Climate change denial and its effect on the scientific community”:

Vested interests and political agents have long opposed political or regulatory action in response to climate change by appealing to scientific uncertainty. Here we examine the effect of such contrarian talking points on the scientific community itself. We show that although scientists are trained in dealing with uncertainty, there are several psychological reasons why scientists may nevertheless be susceptible to uncertainty-based argumentation, even when scientists recognize those arguments as false and are actively rebutting them.

If real uncertainty, the alleged driver of Lewandowsky’s “seepage” and “ambiguity aversion,” has “arguably contributed to a widespread tendency to understate the severity of the climate problem,” and indeed to question its alleged severity, is that not a better outcome than pervasive confirmation bias and a multi-trillion-dollar heist?

Nature is tricky too, and indifferent to our attempts to understand and control it. While the extreme summer heatwave was affecting the Pacific Northwest of North America last month, “global warming” apparently took a winter vacation in continental Antarctica.

Antarctica New Zealand (ANZ), the government agency responsible for that country’s activities in Antarctica, issued this media statement on 16 June 2021:

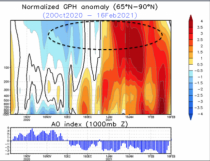

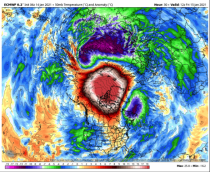

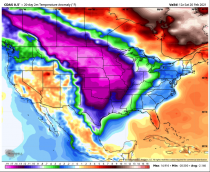

This winter Antarctica is freezing, no surprises there, but it’s colder than usual. As midwinter approaches on Monday, Antarctica is two degrees away from recording its coldest temperature ever! According to ANZ’s Chief Scientific Advisor, Professor John Cottle: This week the temperature at Dome Fuji Station, 2,400 km away from Scott Base, plunged to -81.7C (the record is -83.0C). These temperatures are being caused by a positive SAM (Southern Annular Mode) and a strong polar vortex.

It’s good news for this year’s sea ice, and will mean lots of sea ice growth. Sea ice is frozen ocean water that floats on top of the sea. Dome Fuji Station is 3,810 meters above sea level and located on the second-highest summit of the East Antarctic ice sheet, at 77°30′S 37°30′E. Antarctica’s coldest recorded temperature at ground level is -89.2C, at Vostok Station on 21 July 1983, but the Dome Fuji reading last month is close.

One swallow does not a summer make, of course, nor do a few unusually cold (or hot) days say much, if anything, about “climate change”.

Antarctica’s hottest day? Not so fast. Ironically, a few days ago, on July 1, 2021, the World Meteorological Organization (WMO) recognized a new record high temperature for the Antarctic “continent” of 18.3 Celsius on 6 February 2020 at the Esperanza station (Argentina). (See the latest online issue of the Bulletin of the American Meteorological Society.)

The Antarctic Peninsula (the northwest tip near to South America) is among the fastest-warming regions of the planet, almost 3C over the last 50 years. This new temperature record is therefore consistent with the climate change we are observing.

Yet temperatures at the “northwest tip near South America” tell us next to nothing about the Antarctic continent itself, but that’s another story.

WMO’s expert committee stressed the need for increased caution on the part of both scientists and the media in releasing early announcements of this type of information. This is due to the fact that many media and social media outlets often tend to sensationalize and mischaracterize potential records before they have been thoroughly investigated and properly validated.

If only.

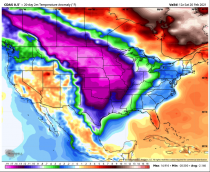

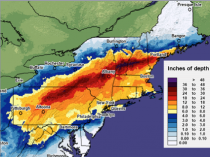

See “2021 a Wild Year Weatherwise and just Half Done!”, which recaps the Northwest heat wave and the February polar vortex outbreak that reached into the Deep South.

----------

The Golden State is entering yet another severe dry season and the state government is responding by draining all the reservoirs and dumping all that precious fresh water straight into the ocean.

Shocking new reports indicate that over the past several weeks, the California State Water Board has routed at least 90 percent of Delta inflow right into the sea, leaving farmers with no water to grow their crops.

“It’s equal to a year’s supply of water for 1 million people,” tweeted Central Valley farmer Kristi Diener, along with the hashtag “#ManMadeDrought.”

While California’s reservoirs are designed to hold up to five years’ worth of fresh water for the state’s water needs - and had been filled to capacity back in June 2019 - the state’s water overseers are now flushing all that water down the toilet, essentially.

According to Diener, who is both a farmer and a water expert, fresh water is being unnecessarily dumped from California’s reservoirs at a time when it is most needed, which bodes ill for the coming summer season.

“Are we having a dry year? Yes,” Diener says.

“That is normal for us. Should we be having water shortages in the start of our second dry year? No. Our reservoirs were designed to provide a steady five-year supply for all users, and were filled to the top in June 2019.”

A data chart from the California Data Exchange Center shows that back in 2019, all of the state’s reservoirs were well above their historical averages. Now, they are all being drained for some unknown reason.

“You’re looking at our largest reservoirs less than two years ago,” Diener says about the chart.

“They were absolutely teeming with water from 107% to 145% of average! Our reservoirs held enough water for everyone who relies on them for their water supply, for 7 years. We are barely into our second dry year. WHERE DID IT GO?”

There would be no water shortages in California if the government stopped draining all the fresh water into the ocean. According to the California Legislative Analyst’s Office, statewide water usage averages around 85 gallons per day per person. This is not very much, and yet urban and residential users are routinely ordered to conserve as much water as possible by letting their lawns turn brown and die, avoiding showers, and washing their clothes less frequently.

Meanwhile, the state government has opened up the dams to let all the fresh water in reserve flow right into the ocean. It is akin to turning on your faucet and letting it run all day, every day, except your faucet is the size of a canyon.

The hypocrisy is stunning, and yet too few Californians seem to be aware of what is taking place right underneath their noses. There is simply no reason for all this fearmongering about water shortages when the state government is wasting all the water that would otherwise be available in abundance.

“Before our magnificent reservoir projects were built, California never had a steady and reliable supply of water. Now water is being managed as if those reserves don’t exist, by emptying the collected water from storage to the sea, rather than saving it for our routinely dry years,” Diener says.

“Our water projects were designed to be managed for the long term providing a minimum five year supply, but California has now put us on track to have a man-made drought crisis every time we don’t have a wet season.”

As for Gov. Gavin Newsom’s $5.1 billion so-called “drought response” package, it does absolutely nothing to fix the problem. All it does is pay off water bills that were made too expensive by the state’s intentional water scarcity scheme.

To learn more about how the state government of California has weaponized the water supply to control the population, visit WaterWars.news.

Sources for this article include: CaliforniaGlobe.com and NaturalNews.com

When asked whether this was true, Anthony Watts, who runs WUWT from California and knows the situation firsthand, said: “Unfortunately, yes. It’s all about this fish called the ‘Delta Smelt’, which they’ve only found four of since 2018 after thousands of surveys. Essentially, it is extinct even after dumping billions of gallons of water to ‘save it’ year after year. Madness, total effing madness.”

Todd Fichette, Associate Editor, Western Farm Press (Farm Progress), added: “Lake Oroville will likely dry up this summer. You’ll remember Oroville Dam from early 2017, when the lake overflowed after the main spillway failed. That forced the evacuation of 250,000 people in the Sacramento Valley, as it was feared Oroville Dam would fail. Oroville is an earthen dam. Oroville Dam also produces hydroelectricity for about 800,000 homes. That will cease later this summer when the lake falls too low to move water through the generating facility. It’s not just a water problem; the Western grid will suffer. That power’s going to have to be made up from somewhere. The drought out West is real. The Colorado River system is in peril. LA is pumping water as fast as it can from the Colorado River because California ceased deliveries to water users through the State Water Project. Lake Mead is said to be lower today than it’s been since shortly after they began filling the reservoir after World War II. The battle right now in California is to hold some water back in Shasta Lake (northern California) for cool-pool releases later this summer for salmon runs in the Sacramento River. That water may be too warm, as that lake too is drying up.”

For dryness since 1920, this year in California trails only 1976/77 and 1924/25, and is close to 2014/15. Note that 2016/17 was the 4th wettest.

A highly qualified and experienced climate modeler with impeccable credentials has rejected the unscientific bases of the doom-mongering over a purported climate crisis. His work has not yet been picked up in this country, but that is about to change. Writing at the Australian site Quadrant, Tony Thomas introduces the English-speaking world to the truth-telling of Dr. Mototaka Nakamura (hat tip: Andrew Bolt, John McMahon).

There’s a top-level oceanographer and meteorologist who is prepared to cry “Nonsense!” on the “global warming crisis” evident to climate modelers but not in the real world. He’s as well qualified as, or better qualified than, the modelers he criticizes - the ones whose Year 2100 forebodings of 4C warming have set the world to spending $US1.5 trillion a year to combat CO2 emissions.

The iconoclast is Dr. Mototaka Nakamura. In June he put out a small book in Japanese on “the sorry state of climate science”. It’s titled “Confessions of a climate scientist: the global warming hypothesis is an unproven hypothesis”, and he is very much qualified to take a stand. From 1990 to 2014 he worked on cloud dynamics and forces mixing atmospheric and ocean flows on medium to planetary scales. His bases were MIT (for a Doctor of Science in meteorology), Georgia Institute of Technology, Goddard Space Flight Centre, Jet Propulsion Laboratory, Duke and Hawaii Universities and the Japan Agency for Marine-Earth Science and Technology. He’s published about 20 climate papers on fluid dynamics.

Today’s vast panoply of “global warming science” is like an upside down pyramid built on the work of a few score of serious climate modelers. They claim to have demonstrated human-derived CO2 emissions as the cause of recent global warming and project that warming forward. Every orthodox climate researcher takes such output from the modelers’ black boxes as a given.

Dr. Nakamura has just made his work available to the English-speaking world:

There was no English edition of his book in June, and only a few bits were translated and circulated. But last week Dr. Nakamura offered his own version [sic] in English via a free Kindle edition. It’s not a translation but a fresh essay leading back to his original conclusions. See an English translation and interview here.

And the critique he offers is comprehensive.

Data integrity

(AT just published the story of Canada’s Environment Agency discarding actual historical data and substituting its models of what the data should have been, for instance.)

Now Nakamura has found it again, further accusing the orthodox scientists of “data falsification” by adjusting previous temperature data to increase apparent warming. “The global surface mean temperature-change data no longer have any scientific value and are nothing except a propaganda tool to the public,” he writes.

The climate models are useful tools for academic studies, he says. However, “the models just become useless pieces of junk or worse (worse in a sense that they can produce gravely misleading output) when they are used for climate forecasting.” The reason:

These models completely lack some critically important climate processes and feedbacks, and represent some other critically important climate processes and feedbacks in grossly distorted manners to the extent that makes these models totally useless for any meaningful climate prediction.

I myself used to use climate simulation models for scientific studies, not for predictions, and learned about their problems and limitations in the process.

Ignoring non-CO2 climate determinants

Climate forecasting is simply impossible, if only because future changes in solar energy output are unknowable. As to the impacts of human-caused CO2, they can’t be judged “with the knowledge and technology we currently possess.”

Other gross model simplifications include:

* Ignorance about large and small-scale ocean dynamics

* A complete lack of meaningful representations of aerosol changes that generate clouds.

* Lack of understanding of drivers of ice-albedo (reflectivity) feedbacks: “Without a reasonably accurate representation, it is impossible to make any meaningful predictions of climate variations and changes in the middle and high latitudes and thus the entire planet.”

* Inability to deal with water vapor elements

* Arbitrary “tunings” (fudges) of key parameters that are not understood

Concerning CO2 changes he says:

I want to point out a simple fact that it is impossible to correctly predict even the sense or direction of a change of a system when the prediction tool lacks and/or grossly distorts important non-linear processes, feedbacks in particular, that are present in the actual system…

...The real or realistically-simulated climate system is far more complex than an absurdly simple system simulated by the toys that have been used for climate predictions to date, and will be insurmountably difficult for those naive climate researchers who have zero or very limited understanding of geophysical fluid dynamics. I understand geophysical fluid dynamics just a little, but enough to realize that the dynamics of the atmosphere and oceans are absolutely critical facets of the climate system if one hopes to ever make any meaningful prediction of climate variation.

Solar input, absurdly, is modeled as a “never changing quantity”. He says, “It has only been several decades since we acquired an ability to accurately monitor the incoming solar energy. In these several decades only, it has varied by one to two watts per square meter. Is it reasonable to assume that it will not vary any more than that in the next hundred years or longer for forecasting purposes? I would say, No.”

There is much, much more. Read the whole thing.

The changes we have discussed are beginning to accelerate. We need to start pressing our politicians, media and educators to move away from dangerous policies often driven as much by personal greed as by ideology.

American Energy Alliance (4/28/21) release: “As the Biden Administration attempts to make good on all of its recent proclamations with respect to global warming and renewable energy, the American Energy Alliance today released the results of a nationwide survey conducted in February of 1,000 voters (3.1% margin of error). The topline results of the survey, conducted by MWR Strategies, are included here.

The results indicate that voters want and expect minimal federal involvement in the energy sector. This sentiment is driven partly by cost considerations, partly by lack of trust in the government’s competence or its intentions, and partly by a strong and durable belief in the efficacy of private sector action. Specific response sets include: Voters don’t want to pay to either address climate change or increase the use of renewable energy. There continues to be limited appetite to pay to address climate change.

When asked what they would be willing to pay each year to address climate change, the median response from voters was 20 dollars. That is very similar to answers we have received to this question over the last few years, which suggests that climate change - despite the rhetoric - has stalled as an issue for most Americans.”
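The quoted 3.1% margin of error for 1,000 voters is what the textbook formula gives for a simple random sample at 95% confidence, using the worst-case proportion p = 0.5. A minimal sketch, assuming simple random sampling (the release does not say how MWR Strategies weighted its sample, so the function below is illustrative only):

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of a normal-approximation confidence interval for a proportion.

    Assumes a simple random sample of size n; z = 1.96 corresponds to 95% confidence,
    and p = 0.5 is the worst case (largest margin).
    """
    return z * math.sqrt(p * (1 - p) / n)


moe = margin_of_error(1000)
print(f"n=1000, 95% confidence: ±{moe * 100:.1f} percentage points")
```

Because the margin shrinks with the square root of the sample size, quadrupling the sample to 4,000 voters would only halve it.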

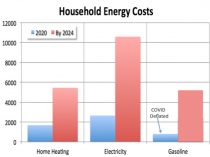

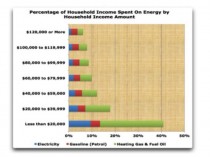

As we show below, the average annual household energy cost under the Biden/Kerry/AOC agenda would be 600 times that level per year, and we would lose not only energy independence but reliability. This does not include the cost of retrofitting (replacing your cars with electric vehicles, doing away with gas stoves and with the propane or oil furnaces that get us through the winter). And it feeds soaring prices for every commodity: food, goods and services. As prices skyrocket (a hidden tax on everyone), wages may edge up but jobs will fall by the wayside. It is a path that leads to energy poverty for those who can least afford it, mainly low- and middle-income families and retirees on fixed incomes. We are already seeing inflation explode.

And all because they have invented an enemy that is actually nature’s greatest benefactor.

Gasoline prices have risen about 35 cents a gallon on average over the last month, according to the AAA motor club, and some believe they could reach $4 a gallon in some states by summer, just when many were hoping they could travel again. I just got my first-ever $500 ($518) heating oil bill, and we keep our thermostat at 66F and have a well-insulated home (R5 siding!).

Our government, most every company and the media are talking carbon reduction, even capture. Do we really have a carbon problem?

Ignored Reality about Air Pollution

In the postwar boom, we had problems with air pollution from factories, coal plants, cars/trucks, inefficient home heating systems and incinerators in apartments. We had air quality issues with pollutants like soot, SO2, ozone, hydrocarbons, NOx, and lead. We set standards that had to be met by industry and automakers. We now have the cleanest air of my lifetime, and among the cleanest in the world today.

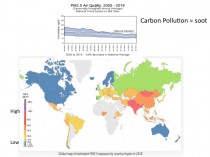

Carbon pollution is not CO2 but small particulates (PM2.5). See how US levels have fallen 43%, well below the goals we set. The U.S., Scandinavia and Australia have the lowest levels in the world - compare with China, Mongolia and India!

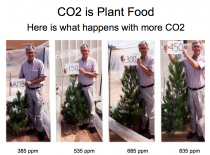

CO2 is a valuable plant fertilizer. We pump CO2 into greenhouses.

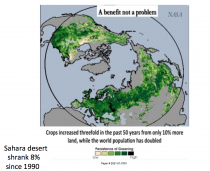

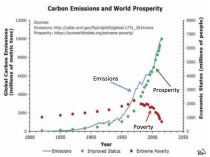

It has caused global greening - the Sahara has shrunk 8% since 1990.

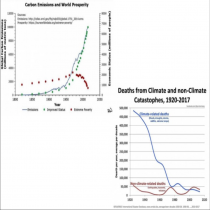

Global prosperity has improved with increased CO2 while poverty declined.

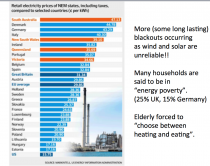

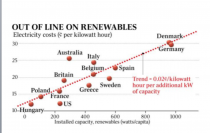

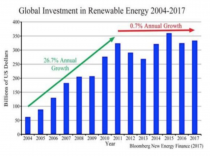

Green carbon taxes and carbon-reduction mandates cause electricity prices to rise.

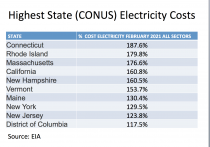

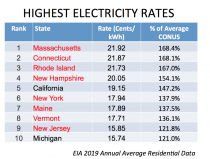

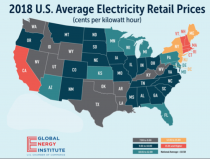

See how the RGGI Northeast states have joined crazy green California. The chart shows the average cost of electricity as a percent of the 48 state average.

Under green-friendly Biden/Kerry it will get worse. The Chamber of Commerce Global Energy Institute’s Energy Accountability Series 2020 projects:

Energy prices would skyrocket under a fracking ban and would be catastrophic for our economy. If such a ban were imposed in 2021, by 2025 it would eliminate 19 million jobs and reduce U.S. Gross Domestic Product (GDP) by $7.1 trillion. Natural gas prices would leap by 324 percent, causing household energy bills to more than quadruple. By 2025, motorists would pay twice as much at the pump ($5/gallon).

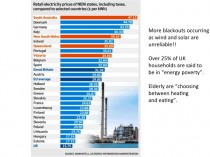

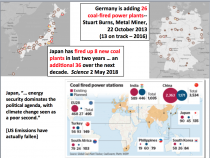

This will lead to soaring energy prices and life-threatening blackouts.

The green countries worldwide have electricity prices three times our levels. We are at the very bottom of the list. But for how long?

If I were to apply the Chamber’s projections to my modest family home and lifestyle, we would pay much more for energy ($13,000 per year!).

Some believe the Obama/Chu goal of $8/gallon gas price could be revived and may even be targeted at $10 which would increase household energy costs another $3000/year. That would not include the cost of forced abandonment of gas-powered vehicles for electric autos, fuel oil or natural gas heating and cooling for electric.

That would be about what many families bring home yearly ($20,000). Not to worry...big government would come to the rescue by subsidizing lower or no income families and adding more to the exploding tax burden for everyone else.

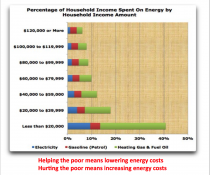

Soaring energy prices, as everywhere this green energy frenzy has been forced, will lead to life-threatening blackouts (remember Texas in February!). Even with energy costs low in 2020, retirees and lower-income families already paid the highest share of their income (over 40%) for energy. They would suffer the worst, as other parts of the world have learned.

Millions, perhaps tens of millions, of people working hard to keep you warm in winter and cool in summer will be out of a job. These people helped make the US energy independent for the first time ever. The talk of green jobs is nonsense, as Europe found out here. The New York Post (4/9/21) reports: “Last week, the Biden administration announced ‘a bold set of actions’ that it said will ‘catalyze’ the installation of 30,000 megawatts of new offshore wind capacity by 2030.

A White House fact sheet claimed the offshore push will create ‘good-paying union jobs’ and ‘strengthen the domestic supply chain.’ One problem: It didn’t contain a single mention of electricity prices or ratepayers. The reason for the omission is obvious: President Biden’s offshore-wind scheme will be terrible for consumers. If those 30,000 megawatts of capacity get built - which, given the history of scuttled projects like Cape Wind, is far from a sure thing - that offshore juice will cost ratepayers billions of dollars more per year than if that same power were produced from onshore natural-gas plants or advanced nuclear reactors...the cost issue is the one that deserves immediate attention because any spike in electricity prices will have an outsized impact on low- and middle-income consumers. Those price hikes will be particularly painful in New York and New England, where consumers already pay some of America’s highest electricity prices...Thus, the electricity from 30,000 megawatts of offshore wind could cost consumers roughly $7.6 billion more per year than if it came from advanced nuclear reactors and about $11.1 billion more than if it were produced from gas-fired generators.”

By the way, perversely, when families can’t afford to pay for the energy (heating oil, gas or electricity) to heat their homes in winter, they revert to burning wood. This introduces the particulate matter and other ‘pollutants’ we have worked so hard to remove at the source.

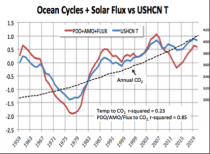

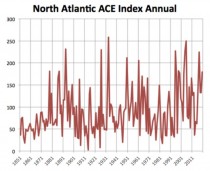

What’s more, we have shown that when we factor in natural cycles in the oceans and on the sun, as well as volcanism, we can explain all the variability of the last century (see), meaning CO2 has no effect. We also see no non-natural changes in extremes of weather.

Francis Menton, Manhattan Contrarian

As readers at this site are well aware, the field of climate “science” and alarmism is subject to an extraordinary degree of orthodoxy enforcement, where all information supporting the official narrative gets enthusiastically promoted, while all information disagreeing with the official narrative gets suppressed or attacked. For just one recent example of the latter, see the Wall Street Journal editorial in the current weekend edition reporting on a bogus Facebook “fact check” of the Journal’s recent review of Steven Koonin’s new book “Unsettled.”

In this context, an article just out on May 6 in the journal Science is truly remarkable. The article is titled “Does ocean acidification alter fish behavior? Fraud allegations create a sea of doubt.” It has the byline of Martin Enserink, Science’s international news editor. Science has a long history of publishing every sort of climate alarmism, and of being an unreceptive forum for anything expressing any sort of skepticism, let alone alleging fraud in claims of climate alarm. Something serious must be going on here.

But what if the warming doesn’t happen, or turns out to be far less than the fear-mongers have projected? That’s where “ocean acidification” comes in. “Ocean acidification” is the one allegedly harmful effect of rising atmospheric CO2 that does not stem initially from warming temperatures. Instead, the idea is that increasing CO2 in the atmosphere will somewhat increase the level of CO2 dissolved in the oceans, which in turn will lower the pH of the oceans. How much? Some projections suggest at the extreme end that average ocean pH may go down from a current 8.1 or so, all the way to maybe about 7.75 by 2100. If you know anything about this subject, or maybe took high school chemistry, you will know that pH of 7 is neutral, lower than 7 is acidic, and above 7 is basic. Thus a pH of 8.1 is not acidic at all, but rather (a little) basic; and a pH of 7.75 is somewhat less basic. The fact that anyone would try to apply the scary term of “ocean acidification” to this small projected shift toward neutrality already shows you that somebody is trying to manipulate the ignorant.

See what happens next in “New Scientific Scandal.”

------------

Ever Deeper And Deeper Into “Climate” Fantasy

April 24, 2021/ Francis Menton

It never ceases to amaze me how the very mention of the word “climate” causes people to lose all touch with their rational faculties. And of course I’m not talking here just about the ordinary man on the street, but also, indeed especially, about our elected leaders and government functionaries.

The latest example is President Biden’s pledge, issued at his “World Climate Summit” on April 22, to reduce U.S. greenhouse gas (GHG) emissions by 50 - 52% from the levels of 2005, and to do so by 2030.

In my last post a couple of days ago, I remarked that “Biden himself has absolutely no idea how this might be accomplished. And indeed it will not be accomplished.” Those things are certainly true, but also fail to do full justice to the extent to which our President and his handlers have now left the real world and gone off into total fantasy.

---------

Biden And Kerry Get Humiliated On Earth Day, But Are Too Dumb To Realize It

April 22, 2021/ Francis Menton

Once again, it’s Earth Day. The first such day was 51 years ago, April 22, 1970.

Since that first one, Earth Day has served as an annual opportunity for sanctimonious socialist-minded apocalypticists to issue prophecies of imminent environmental doom. Here from the Competitive Enterprise Institute is a great list of some 50 or so such predictions uttered since the late 1960s, all of which have since been proven wrong. Interestingly, the ones from the time of the first Earth Day mostly concerned overpopulation, famine, and global cooling. Today, those things seem ever so quaint.

Somewhere along the line, the prophecy of a coming ice age faded away, and global warming surged forth as the much more fashionable doomsday prediction. Today, fealty to the global warming apocalypse orthodoxy is a prerequisite for admission to polite society. Our President goes around repeating the mantra that climate change is an “existential threat,” even as he signs Executive Orders and re-directs half the energies of the vast federal government to fight it.

And what better opportunity than the annual return of Earth Day for our great leaders to demonstrate their deep climate change sincerity? Thus last week we had President Biden’s personal climate emissary to the world, John Kerry, traveling to Shanghai to triumphantly welcome China on board with the official plan to “save the planet” through ending the use of fossil fuels; and today, Biden himself has followed up with his Earth Day Climate Summit, a virtual event said to be attended by leaders of some 40 or so nations, including the likes of China, India and Russia. Surely, things have now completely changed course since the evil Trump has been banished, and the world will shortly be saved by the re-invigoration of the glorious Paris Climate Agreement.

JUST IN

See the history by Paul Homewood here

Marc Morano’s blockbuster book “Green Fraud” is also a must-read.

AOC’s chief of staff Saikat Chakrabarti revealed that the Green New Deal was not about climate change. The Washington Post reported Chakrabarti’s unexpected disclosure in 2019. “The interesting thing about the Green New Deal,” he said, “is it wasn’t originally a climate thing at all.” He added, “Do you guys think of it as a climate thing? Because we really think of it as a how-do-you-change-the-entire-economy thing.” Former Ocasio-Cortez campaign aide Waleed Shahid admitted that Ocasio-Cortez’s GND was a “proposal to redistribute wealth and power from the people on top to the people on the bottom.”

---------

This echoed IPCC official Ottmar Edenhofer who in November 2010 admitted “one has to free oneself from the illusion that international climate policy is environmental policy.” Instead, climate change policy is about how “we redistribute de facto the world’s wealth.” UN Climate Chief Christiana Figueres said “Our aim is not to save the world from ecological calamity but to change the global economic system (destroy capitalism).”

The evidence about the fraud is mounting, but don’t expect coverage in the media. A must-see is A CLIMATE AND ENERGY PRIMER FOR POLITICIANS AND MEDIA by contributing author Allan MacRae. Allan is a highly respected energy leader in Canada who has worked with true climate experts and has helped make Alberta the shining star of Canadian energy over the decades. There too the politicians, the media and the public have been programmed by false claims and green energy frauds, and are willing to toss the low-cost, clean fossil fuel energy legacy out and replace it with unreliable and expensive green energy.

Greens expect the population to adapt its consumption to the available supply and simply come to accept rationing and power interruptions, of the sort that are unfortunately still common in underdeveloped countries. They insist that is the necessary price for averting the phoney climate apocalypse.

See also Stephen Moore’s “Follow the Climate Change Money” here. This is something Eisenhower warned about in his Farewell Address:

“The prospect of domination of the nation’s scholars by Federal employment, project allocations, and the power of money is ever present - and is gravely to be regarded.”

----------

COLLEGE OF ENGINEERING, CARNEGIE MELLON UNIVERSITY

For decades, climate change researchers and activists have used dramatic forecasts to attempt to influence public perception of the problem and as a call to action on climate change. These forecasts have frequently been for events that might be called “apocalyptic,” because they predict cataclysmic events resulting from climate change.

In a new paper published in the International Journal of Global Warming, Carnegie Mellon University’s David Rode and Paul Fischbeck argue that making such forecasts can be counterproductive. “Truly apocalyptic forecasts can only ever be observed in their failure; that is, the world did not end as predicted,” says Rode, adjunct research faculty with the Carnegie Mellon Electricity Industry Center, “and observing a string of repeated apocalyptic forecast failures can undermine the public’s trust in the underlying science.”

Rode and Fischbeck, professor of Social & Decision Sciences and Engineering & Public Policy, collected 79 predictions of climate-caused apocalypse going back to the first Earth Day in 1970. With the passage of time, many of these forecasts have since expired; the dates have come and gone uneventfully. In fact, 48 (61%) of the predictions have already expired as of the end of 2020.

Fischbeck noted, “from a forecasting perspective, the ‘problem’ is not only that all of the expired forecasts were wrong, but also that so many of them never admitted to any uncertainty about the date. About 43% of the forecasts in our dataset made no mention of uncertainty.”

In some cases, the forecasters were both explicit and certain. For example, Stanford University biologist Paul Ehrlich and British environmental activist Prince Charles are serial failed forecasters, repeatedly expressing high degrees of certainty about apocalyptic climate events.

Rode commented “Ehrlich has made predictions of environmental collapse going back to 1970 that he has described as having ‘near certainty’. Prince Charles has similarly warned repeatedly of ‘irretrievable ecosystem collapse’ if actions were not taken, and when expired, repeated the prediction with a new definitive end date. Their predictions have repeatedly been apocalyptic and highly certain...and so far, they’ve also been wrong.”

The researchers noted that the average time horizon before a climate apocalypse for the 11 predictions made prior to 2000 was 22 years, while for the 68 predictions made after 2000, the average time horizon was 21 years. Despite the passage of time, little has changed: across half a century of forecasts, the apocalypse is always about 20 years out.

Fischbeck continued, “It’s like the boy who repeatedly cried wolf. If I observe many successive forecast failures, I may be unwilling to take future forecasts seriously.”
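The counts reported by Rode and Fischbeck hang together: the 11 pre-2000 and 68 post-2000 forecasts sum to the 79 collected, and 48 of 79 rounds to the quoted 61%. A quick check in Python, using only the figures given in the release; since the per-period averages are themselves rounded to whole years, the combined horizon below is approximate:

```python
# Figures as reported by Rode and Fischbeck
total_forecasts = 79
expired_by_end_2020 = 48
pre_2000, post_2000 = 11, 68
avg_horizon_pre_2000, avg_horizon_post_2000 = 22, 21  # years, as reported (rounded)

# The two periods should account for every forecast in the dataset
assert pre_2000 + post_2000 == total_forecasts

expired_share = expired_by_end_2020 / total_forecasts
print(f"Expired: {expired_by_end_2020}/{total_forecasts} = {expired_share:.0%}")

# Count-weighted mean horizon across all 79 forecasts
overall_avg = (
    pre_2000 * avg_horizon_pre_2000 + post_2000 * avg_horizon_post_2000
) / total_forecasts
print(f"Overall average horizon: about {overall_avg:.1f} years")
```

Whichever way the forecasts are grouped, the implied horizon stays near 21 years.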

That’s a problem for climate science, say Rode and Fischbeck.

“The underlying science of climate change has many solid results,” says Fischbeck, “the problem is often the leap in connecting the prediction of climate events to the prediction of the consequences of those events.” Human efforts at adaptation and mitigation, together with the complexity of socio-physical systems, means that the prediction of sea level rise, for example, may not necessarily lead to apocalyptic flooding.

“By linking the climate event and the potential consequence for dramatic effect,” noted Rode, “a failure to observe the consequence may unfairly call into question the legitimacy of the science behind the climate event.”

With the new Biden administration making climate change policy a top priority, trust in scientific predictions about climate change is more crucial than ever; however, scientists will have to be careful to qualify their predictions. In measuring the proliferation of the forecasts through search results, the authors found that forecasts that did not mention uncertainty in their apocalyptic date tended to be more visible (i.e., have more search results available). Making sensational predictions of the doom of humanity, while scientifically dubious, has still proven tempting for those wishing to grab headlines.

The trouble with this is that scientists, due to their training, tend to make more cautious statements and more often include references to uncertainty. Rode and Fischbeck found that while 81% of the forecasts made by scientists referenced uncertainty, fewer than half of the forecasts made by non-scientists did.

“This is not surprising,” said Rode, “but it is troubling when you consider that forecasts that reference uncertainty are less visible on the web. This results in the most visible voices often being the least qualified.”

Rode and Fischbeck argue that scientists must take extraordinary caution in communicating events of great consequence. When it comes to climate change, the authors advise “thinking small.” That is, focusing on making predictions that are less grandiose and shorter in term. “If you want people to believe big predictions, you first need to convince them that you can make little predictions,” says Rode.

Fischbeck added, “We need forecasts of a greater variety of climate variables, we need them made on a regular basis, and we need expert assessments of their uncertainties so people can better calibrate themselves to the accuracy of the forecaster.”

----------------

Note: Mark Perry at Carpe Diem listed 18 major failures here.

He also worked with CEI to compile 50 failed predictions of catastrophe here.

“Most of the people who are going to die in the greatest cataclysm in the history of man have already been born,” wrote Paul Ehrlich in a 1969 essay titled “Eco-Catastrophe!” “By...[1975] some experts feel that food shortages will have escalated the present level of world hunger and starvation into famines of unbelievable proportions. Other experts, more optimistic, think the ultimate food-population collision will not occur until the decade of the 1980s.”

Ehrlich sketched out his most alarmist scenario for the 1970 Earth Day issue of The Progressive, assuring readers that between 1980 and 1989, some 4 billion people, including 65 million Americans, would perish in the “Great Die-Off.”

“It is already too late to avoid mass starvation,” declared Denis Hayes, the chief organizer for Earth Day, in the Spring 1970 issue of The Living Wilderness.

Leonard Nimoy talked about the coming ice age in 1979 here.

Tony Heller exposes the fraudsters here:

The story below by Jason Hayes in USA TODAY is worth a read. See also this excellent summary by Chris Martz.

Common sense has already lost to political considerations - and people across Texas and the Great Plains are paying the price.

Jason Hayes, Opinion contributor

It’s not just a cold front. Over a decade of misguided green energy policies are wreaking havoc in Texas and the lower Midwest right now - despite non-stop claims to the contrary.

The immediate cause for the power outages in Texas this week was extreme cold and insufficient winterization of the state’s energy systems. But there’s still no escaping the fact that, for years, Texas regulators have favored the construction of heavily subsidized renewable energy sources over more reliable electricity generation. These policies have pushed the state away from nuclear and coal and now millions in Texas and the Great Plains states are learning just how badly exposed they are when extreme weather hits.

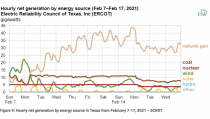

Renewables’ defenders retort that Texas’ wind resource is “reliably unreliable.” Translation: It can’t be counted on when it’s needed most. The state has spent tens of billions of dollars on wind turbines that don’t work when millions of people desperately need electricity. As the cold weather has gotten worse, half the state’s wind generation has sat frozen and immobile. Where wind provided 42% of the state’s electricity on Feb. 7, it fell to 8% on Feb. 11.

The Texas power outage was inevitable

Unsurprisingly, the failure of wind has sparked a competing narrative that fossil fuel plants were the real cause of power outages. This claim can be quickly dispelled with a look at data from ERCOT, the state’s grid operator. Even though the extreme cold had frozen cooling systems on coal plants and natural gas pipelines, the state’s coal plants still upped their output by 47% in response to increasing demand. Natural gas plants across the state increased their output by an amazing 450%. Fossil fuels have done yeoman’s work to make up for wind’s reliable unreliability.

Sadly, even these herculean efforts weren’t enough. The loss of wind has been compounded by the loss of some natural gas and coal generation, and one nuclear reactor, which experienced a cold-related safety issue and shut down. Things are improving, but rolling power outages are still impacting millions. Had the state invested more heavily in nuclear plants instead of pushing wind power, Texans would have ample, reliable, safe, emission-free electricity powering their lives through the cold. Instead, over 20 have died.

This sad outcome was inevitable. Renewable energy sources have taken off in popularity largely because of state mandates and federal subsidies. As they’ve become more popular, reliable sources like nuclear power and coal have felt the squeeze.

Last year, wind overtook coal as Texas’ second largest source of electricity generation. The most recent federal data indicates that, in October last year, natural gas provided 52% of the Lone Star State’s electricity, while wind generated about 22%, coal kicked in 17%, and nuclear added 8%. The rise of wind means the grid increasingly relies on unreliable energy.

This won’t be the last power crisis

The Texas energy crisis isn’t a one-off, either. The same thing happened in 2019 when Michigan endured the Polar Vortex. Extreme cold combined with limited natural gas supplies and non-existent renewable output. Residents all received text messages warning them to turn their thermostats down to 65°F or lower to stave off a system-wide failure.

California’s rolling blackouts last summer are another example. Dwindling solar generation in late afternoon, shuttered nuclear plants, and insufficient supply from gas plants could not keep up with rising energy demand due to extreme heat.

And then there’s Joe Biden’s $2 trillion promise to wean America off reliable energy. If Biden’s aggressive climate plan is enacted, it will push for tens of thousands of wind turbines and millions of solar panels in an expensive effort to achieve net zero emissions from the nation’s electricity sector by 2050. But doing this will only further spread the problems that Texans are currently experiencing.

America can’t go down this foolish road. Common sense has already lost to political considerations - and people across Texas and the Great Plains are paying the price. They aren’t the first victims, and they certainly won’t be the last, if politicians continue to push unreliable renewable energy instead of the reliable sources families need to stay warm and live their lives. A green future shouldn’t be this dark.

Jason Hayes is the director of environmental policy at the Mackinac Center for Public Policy.

Also see A CLIMATE AND ENERGY PRIMER FOR POLITICIANS AND MEDIA by contributing author Allan MacRae. Allan is a highly respected energy leader in Canada who has worked with true climate experts and has helped make Alberta the shining star of Canadian energy over the decades. There, too, politicians, media, and the public have been programmed by false claims and green energy frauds and are willing to toss out the legacy of low-cost, clean fossil fuel energy and replace it with unreliable and expensive green energy. Greens expect the population to adapt its consumption to the available supply and simply come to accept rationing and power interruptions, of the sort that are unfortunately still common in underdeveloped countries. They insist that is the necessary price for averting the phoney climate apocalypse.

---------

Biden was the sheriff managing the failed $800B Obama stimulus, which led to 77 bankruptcies, including Solyndra. The same will happen on a larger scale with the $3T plan today. People will die in our extreme climate areas.

Europe has years of experience with big wind failures and long-term issues; see this story: What happens to all the old wind turbines? Also note that solar panels contain toxic rare earth metals that can’t be disposed of at the local .. See this story.

The blackouts, which have left as many as 4 million Texans trapped in the cold, show the numerous chilling consequences of putting too many eggs in the renewable basket.

By Jason Isaac, The Federalist

FEBRUARY 18, 2021

As Texans reel from ongoing blackouts at the worst possible time, during a nationwide cold snap that has sent temperatures plummeting to single digits, the news has left people in other states wondering: How could this happen in Texas, the nation’s energy powerhouse?

But policy experts have seen this moment coming for years. The only surprise is that the house of cards collapsed in the dead of winter, not during the toasty Texas summers that usually shatter peak electricity demand records.

Fossil Fuels Aren’t to Blame

There are misleading reports asserting the blackouts were caused by large numbers of natural gas and coal plants failing or freezing. Here’s what really happened: the vast majority of our fossil fuel power plants continued running smoothly, just as they do in far colder climates across the world. Power plant infrastructure is designed for cold weather and rarely freezes, unlike wind turbines that must be specially outfitted to handle extreme cold.

It appears that ERCOT, Texas’s grid operator, was caught off guard by how soon demand began to exceed supply. Failure to institute a managed rolling blackout before the grid frequency fell to dangerously low levels meant some plants had to shut off to protect their equipment. This is likely why so many power plants went offline, not because they had failed to maintain operations in the cold weather.

Yet these operational errors overshadow the decades of policy blunders that made these blackouts inevitable. Thanks to market-distorting policies that favor and subsidize wind and solar energy, Texas has added more than 20,000 megawatts (MW) of those intermittent resources since 2015 while barely adding any natural gas and retiring significant coal generation.

Increased Reliance on Unreliable Renewables

On the whole, Texas is losing reliable generation and counting solely on wind and solar to keep up with its growing electricity demand. I wrote last summer about how ERCOT was failing to account for the increasing likelihood that an event combining record demand with low wind and solar generation would lead to blackouts. The only surprise was that such a situation occurred during a rare winter freeze and not during the predictable Texas summer heat waves.

Yet ERCOT still should not have been surprised by this event, as its own long-term forecasts indicated it was possible, even in the winter. Although many wind turbines did freeze and total wind generation was at 2 percent of installed capacity Monday night, overall wind production at the time the blackouts began was roughly in line with ERCOT forecasts from the previous week.

We knew solar would not produce anything during the night, when demand was peaking. Intermittency is not a technical problem but a fundamental reality when trying to generate electricity from wind and solar. This is a known and predictable problem, but Texas regulators fooled themselves into thinking that the risk of such low wind and solar production at the time it was needed most was not significant.

Special Breaks Helped Cause the Blackouts

The primary policy blunder that made this crisis possible is the lavish suite of government incentives for wind and solar. They guarantee profits to big, often foreign corporations and lead to market distortions that prevent reliable generators from building the capacity we need to keep the lights on when wind and solar don’t show up.

Research by the Texas Public Policy Foundation’s Life: Powered project found that more than $80 billion of our tax dollars have been spent on wind and solar subsidies in the last decade, in federal subsidies alone. Texans are also charged an average of $1.5 billion a year in state subsidies for renewable energy.

All that cash hasn’t materially changed our energy landscape. Wind and solar still provide just 4 percent of our energy nationwide. The promise that subsidies would kickstart renewable energy technology remains unmet after more than 40 years.

Renewable advocates will be quick to point out that fossil fuels also receive subsidies from the federal government. That’s partially true, but solar companies receive 75 times more money and wind 17 times more per unit of electricity generated. Nevertheless, the best solution for Texans, and all Americans, would be to eliminate all energy subsidies and allow the free market to drive our energy choices.

As politically popular as wind and solar energy are, no amount of greenwashing can cover up their fundamental unreliability and impracticality for anything other than a supplemental energy source. Yet our government, even in the oil country of Texas, home of Spindletop and the Permian Basin, is designed to incentivize renewable energy projects.

Keep This from Happening Again

This week’s blackouts should be a wake-up call to politicians. Overconfidence in renewables led us uncomfortably close to total grid failure - and when the going gets tough, few things really matter to voters as much as access to electricity. Without it, scrambling for the barest necessities like food, water, and warmth becomes expensive, stressful, and all-consuming.

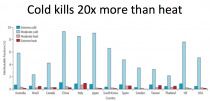

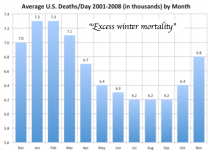

The consequences are potentially deadly. For all the talk of climate change, cold is far deadlier than heat, responsible for 20 times more deaths. Although the cold itself may not kill you - you might not literally freeze to death - it has devastating potential to exacerbate pre-existing conditions and make otherwise minor illnesses life-threatening.